Making informed marketing decisions depends on evidence rather than guesswork. A/B testing gives businesses a data-driven way to optimize their campaigns and understand consumer behavior. It lets marketers compare two versions of a marketing element, such as a webpage, email, or advertisement, to determine which performs better. By acting on the results, marketers can work toward higher conversion rates, increased engagement, and improved return on investment (ROI).

This article covers A/B testing and how it supports smarter marketing decisions. We will explore the key principles of effective A/B testing, including hypothesis formulation, experiment design, and data analysis. From website optimization to email marketing and social media campaigns, we will examine how A/B testing can improve various aspects of your marketing strategy.

What Is A/B Testing?

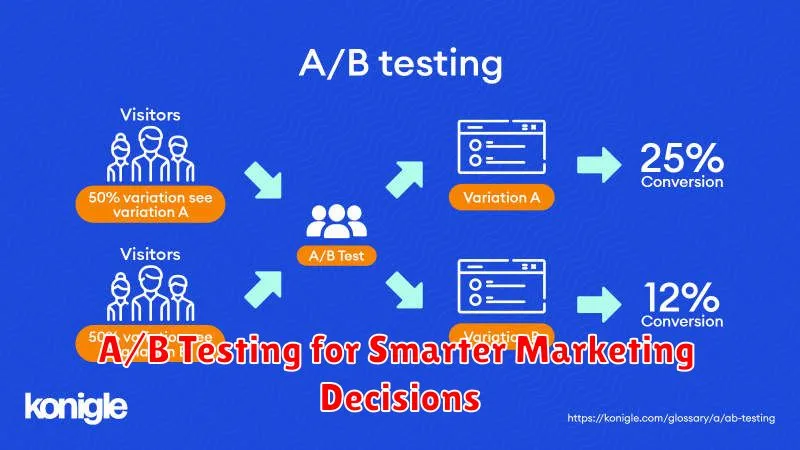

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, email, or other marketing asset to determine which performs better. This is done by randomly showing different versions (A and B) to similar groups of users and tracking key metrics such as conversion rates, click-through rates, or engagement time. The version that yields the desired outcome is declared the “winner” and then implemented.

The process typically involves identifying a goal, for example, increasing sales or sign-ups. The existing webpage or marketing material serves as the “control” (A). A variation of it, the “challenger” (B), is then created with a change to one specific element, such as a different headline, call-to-action, or image placement. The performance of both versions is then carefully measured and statistically analyzed to determine if the change in version B resulted in a significant improvement.
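One way to show versions A and B to similar groups is to assign each user to a version by hashing a stable identifier. The sketch below is only an illustration: the function name `assign_variant`, the experiment label, and the 50/50 split are assumptions, not something a particular testing tool prescribes.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "headline_test") -> str:
    """Assign a user to 'A' (control) or 'B' (challenger).

    The experiment name and the 50/50 split are illustrative assumptions.
    """
    # Hash the experiment name together with the user id so the same user
    # always gets the same version within one experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # integer bucket in the range 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid))
```

Because the assignment depends only on the identifier, a returning visitor keeps seeing the same version, which keeps the two groups cleanly separated.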

A/B testing is a powerful tool for data-driven decision-making and allows businesses to continually optimize their marketing efforts. By testing different variations, companies can gain valuable insights into user behavior and preferences, ultimately leading to increased effectiveness and return on investment (ROI).

When Should You Use It?

Whether to run an A/B test depends heavily on its intended purpose and the specific context. Careful consideration of factors such as need, availability, and potential impact is crucial. For instance, a specialized tool should be employed only when the task at hand necessitates its unique capabilities. Using it inappropriately can lead to inefficiency or even unintended consequences. Assessing the situation and weighing the pros and cons beforehand is essential for making an informed decision.

Understanding the limitations of a particular resource is also paramount. Whether it’s a piece of equipment, a specific skill, or a financial investment, knowing its boundaries prevents overuse or misuse. Overreliance on a single solution can create vulnerabilities and limit adaptability. A diverse approach, incorporating various options and strategies, often yields more robust and sustainable results.

Ultimately, the decision of “when” comes down to strategic thinking. Aligning the use of resources with overall goals and objectives maximizes effectiveness. This involves evaluating the immediate needs as well as the long-term implications. By carefully considering the context, limitations, and desired outcomes, you can ensure the optimal use of any resource.

Planning a Controlled Test

A controlled test, also known as a controlled experiment, is a scientific method used to investigate the relationship between variables. It involves manipulating an independent variable while keeping all other potential influencing factors, known as control variables, constant. This isolation allows researchers to observe and measure the effect of the independent variable’s changes on a dependent variable, thus drawing conclusions about cause and effect.

Careful planning is crucial for a successful controlled test. This includes precisely defining the research question and hypothesis, selecting appropriate experimental and control groups, and meticulously outlining the procedures for manipulating the independent variable and measuring the dependent variable. Standardized procedures are essential to minimize bias and ensure the reproducibility of the results.

Data analysis in controlled tests often involves statistical methods to determine the significance of the observed changes. This helps researchers to confidently attribute any observed effects to the manipulated independent variable rather than random chance or other uncontrolled factors. Rigorous planning and execution of controlled tests are paramount for producing reliable and valid scientific findings.

Choosing What to Test

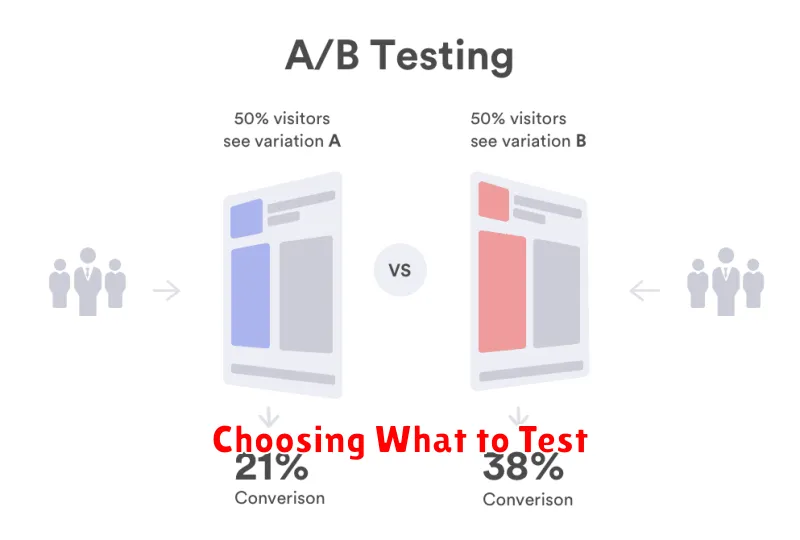

Selecting the right elements for testing is crucial for maximizing impact and minimizing wasted effort. Prioritize testing features with the highest risk of failure, those with the greatest impact on users, and components that are new or have undergone recent changes. Consider factors such as complexity, dependencies on other systems, and the frequency of use.

Testing should also encompass various levels, from unit testing individual components to integration testing interactions between modules and system testing the application as a whole. User acceptance testing (UAT) is essential for verifying that the software meets business requirements and user expectations. Choosing the appropriate testing level depends on the development phase and the specific goals of the test.

Effective test selection also involves considering the available resources, including time, budget, and personnel. It’s important to balance thoroughness with practicality, focusing on areas that provide the greatest return on investment in terms of risk mitigation and quality improvement.

Best Practices for Email Tests

Testing your emails is crucial for ensuring they render correctly across various email clients and devices, and that your messaging effectively reaches your target audience. Thorough testing should encompass design rendering, link functionality, deliverability, and content accuracy. Start by testing with a diverse range of popular email clients like Gmail, Outlook, and Apple Mail, as well as different devices including desktops, tablets, and smartphones. Check that images display properly, that links direct to the correct pages, and that your subject lines and preheader text are optimized.

Consider A/B testing key elements of your email campaigns to optimize performance. This involves creating two slightly different versions of an email (A and B) and sending them to separate segments of your audience to determine which performs better. You can test subject lines, calls to action, email content, send times, and even “from” names. Analyze the results, focusing on metrics like open rates, click-through rates, and conversions to identify which version resonates more with your audience. Implement the winning variation in your future email campaigns.
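Sending versions A and B to separate segments works best when the segments are chosen at random. The following is a minimal sketch of such a split; the function name `split_audience` and the fixed seed are assumptions made for illustration, and a real email platform may handle this step for you.

```python
import random


def split_audience(recipients, seed=42):
    """Randomly split a recipient list into two segments of near-equal size.

    The seed is fixed only so that the example is reproducible.
    """
    shuffled = list(recipients)            # copy so the input is not modified
    random.Random(seed).shuffle(shuffled)  # shuffle with a private generator
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


if __name__ == "__main__":
    audience = [f"person{i}@example.com" for i in range(10)]
    segment_a, segment_b = split_audience(audience)
    print("Version A goes to:", segment_a)
    print("Version B goes to:", segment_b)
```

Shuffling before splitting avoids accidental bias, for example when the list is sorted by sign-up date and older subscribers behave differently from newer ones.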

Before sending any email, always conduct a final proofread. Check for typos, grammatical errors, and broken links, and verify the accuracy of any dynamic content. Sending an email with mistakes can damage your brand’s credibility. Consider using a checklist to ensure you cover all essential aspects before hitting the send button. This final review will help maintain a professional image and maximize the impact of your email communications.

Landing Page Split Testing

Landing page split testing, also known as A/B testing, is the process of comparing two or more versions of a landing page to determine which performs better. By showing different variations to similar segments of your audience, you can gather data on which version leads to higher conversion rates. This data-driven approach allows you to optimize your landing pages for specific goals, such as increasing leads, sales, or sign-ups. Testing key elements like headlines, calls to action, images, and forms helps you understand what resonates best with your target audience.

The process involves creating a “control” version (your original landing page) and a “variation” (the modified version). Traffic is then split between the two versions, and key metrics are tracked. These key metrics might include conversion rate, bounce rate, time spent on page, and click-through rate. Once enough data has been collected for the difference to reach statistical significance, you can determine which version is the winner with reasonable confidence and implement it as your new control.
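As a rough illustration of tracking a key metric for each version, the sketch below computes conversion rates and the relative lift of the variation over the control. The class `VariantStats`, the function `relative_lift`, and the visitor counts are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Visitor and conversion counts for one version of a landing page."""
    name: str
    visitors: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        # Guard against division by zero when no traffic has arrived yet.
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_lift(control: VariantStats, variation: VariantStats) -> float:
    """Relative change in conversion rate of the variation versus the control."""
    if control.conversion_rate == 0:
        raise ValueError("control has no conversions; lift is undefined")
    return (variation.conversion_rate - control.conversion_rate) / control.conversion_rate


if __name__ == "__main__":
    control = VariantStats("control", visitors=1000, conversions=50)      # hypothetical counts
    variation = VariantStats("variation", visitors=1000, conversions=60)  # hypothetical counts
    print(f"control rate:   {control.conversion_rate:.2%}")
    print(f"variation rate: {variation.conversion_rate:.2%}")
    print(f"relative lift:  {relative_lift(control, variation):.1%}")
```

A lift figure on its own does not say whether the difference is real; it still has to be checked for statistical significance.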

Effective split testing requires a systematic approach. Start by identifying a clear goal and hypothesizing which changes might improve performance. Then, create variations that test those hypotheses. Finally, analyze the results and iterate based on your findings. Continuous split testing ensures your landing pages are constantly optimized for maximum effectiveness.

Tracking Statistical Significance

Statistical significance helps us determine if observed results are likely due to a real effect or just random chance. It’s crucial for drawing reliable conclusions from data. A key measure is the p-value, which represents the probability of observing the obtained results (or more extreme results) if there were truly no effect. A commonly used threshold for statistical significance is a p-value of 0.05. This means if the p-value is less than or equal to 0.05, we reject the null hypothesis (the assumption of no effect) and consider the results statistically significant.
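For conversion-style metrics, one common way to obtain a p-value is a two-proportion z-test. The sketch below is one possible calculation, not the only valid one; it assumes a two-sided test, samples large enough for the normal approximation, and hypothetical counts. The function name is invented for the example.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates.

    Uses a pooled two-proportion z-test with a normal approximation.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under the null hypothesis both versions share one conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # P(|Z| >= |z|) for a standard normal variable Z.
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    p_value = two_proportion_p_value(conv_a=50, n_a=1000, conv_b=60, n_b=1000)
    print(f"p-value: {p_value:.3f}")
    print("significant at 0.05" if p_value <= 0.05 else "not significant at 0.05")
```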

Tracking statistical significance involves monitoring the p-value as data accumulates, especially in scenarios like A/B testing or clinical trials. It’s important to avoid prematurely concluding significance based on small sample sizes. Early positive results can be misleading due to random variation. Repeatedly checking the p-value and stopping the moment it dips below the threshold inflates the false-positive rate, so it is safer to decide on a sample size in advance and evaluate the result once that sample has been reached. As more data is collected, the p-value will fluctuate and ideally stabilize, providing a more reliable indication of a true effect. Confidence intervals are another valuable tool, providing a range of plausible values for the true effect size.
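To illustrate the confidence interval mentioned above, the sketch below computes an approximate interval for the difference between two conversion rates. It assumes a simple normal (Wald) approximation and hypothetical counts; the function name is invented for the example, and other interval methods exist.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Approximate confidence interval for (rate of B) minus (rate of A)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Unpooled standard error of the difference between two proportions.
    std_err = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    # Critical value of the standard normal, e.g. about 1.96 for 95%.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = rate_b - rate_a
    return diff - z * std_err, diff + z * std_err


if __name__ == "__main__":
    low, high = diff_confidence_interval(50, 1000, 60, 1000)
    print(f"95% interval for the difference: [{low:.4f}, {high:.4f}]")
```

If the interval contains zero, the data are still compatible with there being no difference between the two versions.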

Several factors influence statistical significance, including the effect size (the magnitude of the difference or relationship being investigated), the sample size, and the variability within the data. A larger effect size, a larger sample size, or lower variability will generally increase the likelihood of achieving statistical significance. It’s important to remember that statistical significance doesn’t necessarily imply practical significance. A statistically significant result may not be meaningful or impactful in a real-world context.
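The link between effect size and sample size can be made concrete with a rough sample-size estimate. The sketch below uses a standard normal-approximation formula for comparing two proportions; the function name, the default significance level of 0.05, the default power of 0.8, and the example rates are all assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate number of users needed in each group.

    alpha is the two-sided significance level and power is the chance of
    detecting the effect if it really exists (both are assumed defaults).
    """
    if baseline_rate == expected_rate:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two groups.
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


if __name__ == "__main__":
    # A small expected improvement needs far more users than a large one.
    print(sample_size_per_variant(0.05, 0.06))
    print(sample_size_per_variant(0.05, 0.10))
```

Running an estimate like this before a test starts also gives a natural stopping point, so the result is evaluated at a sample size chosen in advance.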

Iterating Based on Results

Iteration based on results means that the execution of a loop (or any repetitive process) is influenced by the outcome of operations within the loop. Instead of iterating a fixed number of times, the process continues until a specific condition, determined by the results obtained during each iteration, is met. This condition could be the achievement of a desired outcome, the detection of an error, or reaching a certain threshold.

A common example is using a while loop to read data from a file until the end-of-file is reached. The loop continues to iterate as long as there’s data to be processed, and terminates when the end-of-file condition (the result of a read operation) is met. Other scenarios include searching for a specific item in a dataset, optimizing a model until a performance target is reached, or collecting data in an experiment until a planned sample size is reached. In these cases, the number of iterations is not predefined but dynamically determined by the results obtained during execution.
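The file-reading example can be sketched as follows. To keep the sketch self-contained it reads from an in-memory text stream rather than a file on disk; the function name and the sample data are invented for the illustration.

```python
import io


def count_nonempty_lines(stream) -> int:
    """Read a text stream line by line until end-of-file is reached."""
    count = 0
    while True:
        line = stream.readline()
        if line == "":  # readline() returns an empty string only at end-of-file
            break       # termination depends on the result of the read
        if line.strip():
            count += 1
    return count


if __name__ == "__main__":
    data = io.StringIO("first row\n\nsecond row\nthird row\n")
    print(count_nonempty_lines(data))
```

The loop has no fixed iteration count: it stops only when the read operation reports that no data is left, which is the termination condition described above.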

Key benefits of result-based iteration include efficiency (avoiding unnecessary iterations), flexibility (adapting to variable conditions), and improved control over the process. However, it requires careful design to ensure proper termination conditions and avoid infinite loops. The logic controlling the iteration must reliably detect the desired outcome or termination criteria.

Common Mistakes to Avoid

One of the most common mistakes people make is failing to plan ahead. Whether it’s a project, a trip, or even just a simple task, taking the time to plan and prepare can save you a lot of headaches down the road. This includes outlining steps, gathering necessary resources, and anticipating potential challenges. By addressing these factors upfront, you can significantly increase your chances of success and avoid costly rework or delays.

Another frequent error is poor communication. Misunderstandings can easily arise from unclear instructions, lack of feedback, or simply not listening effectively. Clear and concise communication is essential in any situation, whether it involves colleagues, clients, or personal relationships. Be sure to actively listen, express yourself clearly, and confirm that everyone is on the same page. This will prevent frustration and ensure everyone is working towards the same goal.

Finally, procrastination is a common pitfall that can lead to missed deadlines, subpar work, and increased stress. Putting things off until the last minute often results in rushed efforts and compromises in quality. To avoid this, prioritize tasks, break down large projects into smaller, manageable steps, and set realistic deadlines. By taking a proactive approach and tackling tasks efficiently, you can avoid the negative consequences of procrastination and achieve better results overall.

Tools for Efficient Testing

Efficient testing relies heavily on utilizing the right tools. Test management tools help organize test cases, track progress, and report results. Popular choices include Zephyr, TestRail, and Xray. Automation tools like Selenium, Appium, and Cypress can significantly speed up repetitive tests and improve coverage. Selecting the correct tools for your project depends on various factors such as budget, team expertise, and project requirements.

Beyond dedicated testing tools, integrating with other development tools can further enhance efficiency. Connecting with CI/CD pipelines allows for automated test execution as part of the build process. Utilizing bug tracking systems like Jira or Bugzilla ensures seamless defect tracking and resolution. Communication platforms like Slack or Microsoft Teams facilitate efficient collaboration amongst team members.

Finally, choosing the right combination of tools is crucial. Consider the specific needs of your project and team. Prioritize tools that offer seamless integration, support your chosen development methodologies, and ultimately contribute to faster feedback loops and higher quality software.